Chapter 16 Stacking models

Bagging and boosting combine a series of models into an ensemble whose performance is better than that of the individual models. Prominent examples are random forests (bagging) and XGBoost (boosting). In these examples, the individual models are all of the same type, e.g. decision trees (see 27.10, 27.11, and 27.12).

Stacking is a similar concept that goes back to Wolpert (1992), who introduced the idea of stacked generalization. A set of base models is combined using a meta-model that is trained to find the optimal combination of the base models. The base models can (and should) be highly diverse.
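As a minimal illustration, assume a linear meta-model, which is one common choice and not the only one. The notation is introduced here for illustration only: $M$ is the number of base models, $\hat{f}_m$ is the fitted prediction function of base model $m$, and $\beta_0, \dots, \beta_M$ are the coefficients learned by the meta-model. The stacked prediction for a new observation $x$ can then be written as:

$$
\hat{y}(x) = \beta_0 + \sum_{m=1}^{M} \beta_m \, \hat{f}_m(x)
$$

A base model with $\beta_m = 0$ does not contribute to the ensemble at all.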

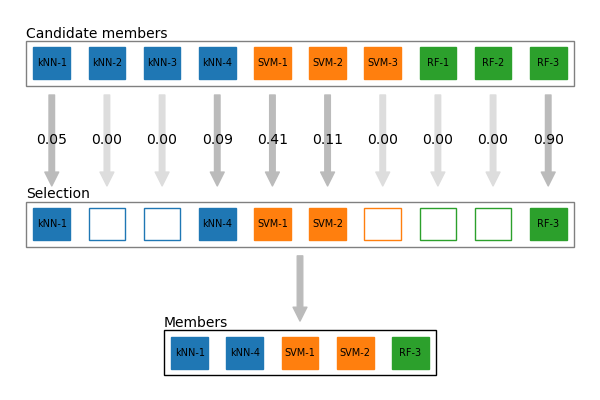

Figure 16.1: Concept of stacking models

Figure 16.1 shows the concept of stacking. The base models are trained on the training data. Their predictions are then used as input features for the meta-model, which is trained to reproduce the observed outcomes of the training data from these predictions. To avoid rewarding base models that overfit, the predictions used to train the meta-model should ideally be out-of-sample predictions, e.g. from cross-validation. The trained meta-model then combines the predictions of the base models to make the final prediction. Ideally, the meta-model is subject to some form of constraint that leads to the selection of a subset of the candidate members.
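The workflow described above can be sketched by hand in a few lines of R. This is a minimal sketch and not how any particular package implements stacking. Several choices are assumptions made for illustration: the `mtcars` data, the two base models (`lm` and `rpart`), 5-fold cross-validation to obtain out-of-fold predictions, and a linear meta-model with non-negative weights fitted via `optim`. The helper `stack_predict()` is hypothetical.

```r
# Sketch of stacking with base R and the recommended package rpart.
library(rpart)

set.seed(42)
dat  <- mtcars
k    <- 5
fold <- sample(rep(seq_len(k), length.out = nrow(dat)))

# Step 1: out-of-fold predictions of the base models (one column per model)
oof <- matrix(NA_real_, nrow = nrow(dat), ncol = 2,
              dimnames = list(NULL, c("lm", "tree")))
for (i in seq_len(k)) {
  train <- dat[fold != i, ]
  test  <- dat[fold == i, ]
  fit_lm   <- lm(mpg ~ wt + hp, data = train)
  fit_tree <- rpart(mpg ~ wt + hp, data = train)
  oof[fold == i, "lm"]   <- predict(fit_lm, newdata = test)
  oof[fold == i, "tree"] <- predict(fit_tree, newdata = test)
}

# Step 2: meta-model, here a linear combination with non-negative weights
# (the intercept is unconstrained, the model weights are bounded below by 0)
y   <- dat$mpg
sse <- function(b) sum((y - b[1] - oof %*% b[-1])^2)
meta <- optim(c(0, 0.5, 0.5), sse, method = "L-BFGS-B",
              lower = c(-Inf, 0, 0))
weights <- setNames(meta$par, c("intercept", "lm", "tree"))

# Step 3: refit the base models on all training data and combine them
full_lm   <- lm(mpg ~ wt + hp, data = dat)
full_tree <- rpart(mpg ~ wt + hp, data = dat)

stack_predict <- function(newdata) {
  base <- cbind(predict(full_lm, newdata), predict(full_tree, newdata))
  drop(weights["intercept"] + base %*% weights[c("lm", "tree")])
}

weights
head(stack_predict(dat))
```

The non-negativity bound is only one simple form of constraint. It can push the weight of a base model to exactly zero, but it does not penalize the size of the weights the way an L1 penalty would.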

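There are many ways to implement stacking. In the tidymodels ecosystem, stacking is available through the stacks package. By default, stacks fits a linear meta-model with L1 regularization (lasso) on the predictions of the candidate models, so that only candidates with non-zero coefficients remain in the ensemble.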
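The following is a minimal sketch of a regression stack built with the stacks package. The dataset (`mtcars`), the predictors, the two candidate model types, and the grid size are assumptions chosen to keep the example small. The sketch assumes that the tidymodels, stacks, rpart, and glmnet packages are installed. With so few observations the resulting weights are not meaningful; the point is only the sequence of calls.

```r
library(tidymodels)
library(stacks)

set.seed(123)
folds <- vfold_cv(mtcars, v = 5)
rec   <- recipe(mpg ~ wt + hp + disp, data = mtcars)

# Candidate 1: linear regression, evaluated on the resamples.
# control_stack_resamples() keeps the out-of-sample predictions and
# workflows that the stack needs later.
lm_res <- workflow() |>
  add_recipe(rec) |>
  add_model(linear_reg()) |>
  fit_resamples(resamples = folds, control = control_stack_resamples())

# Candidate set 2: regression trees with a tuned cost-complexity parameter.
tree_spec <- decision_tree(cost_complexity = tune(), min_n = 5) |>
  set_mode("regression")

tree_res <- workflow() |>
  add_recipe(rec) |>
  add_model(tree_spec) |>
  tune_grid(resamples = folds, grid = 4, control = control_stack_grid())

# Build the stack: collect candidates, fit the regularized meta-model,
# then refit the selected members on the full training data.
model_stack <- stacks() |>
  add_candidates(lm_res) |>
  add_candidates(tree_res) |>
  blend_predictions() |>
  fit_members()

model_stack
preds <- predict(model_stack, new_data = mtcars)
```

All candidates must be evaluated on the same resamples (`folds` here), because the meta-model is trained on their out-of-sample predictions for the same observations. Printing `model_stack` lists the members that received a non-zero weight.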

Todo:

Read the articles on https://stacks.tidymodels.org/ to learn how to use the stacks package for

- regression and

- classification.